IP27: What I've Been Reading In August

Thanks for the kind feedback about IP26 on the Olympics and shared cultural moments. Luckily, the Paralympics have now begun, adding again to the vault of collective sporting memories. August hasn’t, of course, been entirely spent watching sport! There has also been a batch of fascinating writing about tech and policy.

Each month, I’m going to try to share the most interesting things I’ve read over that month. As always, please do share and like. As with the RT does not = endorsement concept, I’m not sharing these articles because I necessarily agree. Instead, I’m sharing because they provide an interesting (and sometimes provocative) take.

What I’ve Been Reading In August

Does the UK lack risk-takers?

I’ve written in IP14 about the risk-takers like George Stephenson, disrupters who drove the Industrial Revolution and ensured that Britain was the first country to industrialise. The ability to take big risks to back up great ideas was fundamental to the Industrial Revolution and a characteristic of Victorian Britain. But that risk-taking mentality seems to have diminished, with Silicon Valley much more likely to back high-risk ideas. It’s a problem that has been rearing its head for a while - arguably going back all the way to Joseph Chamberlain and most notably chronicled in Corelli Barnett’s Pride and Fall series of books on British decline. The gap between British excellence in university research and the risk-bearing application of that research has long been an issue.

Monzo founder, Tom Blomfield (a San Francisco-based Brit now with Y Combinator), has a fascinating take on what he sees as cultural British risk-aversion, which he contrasts with his new home. He points out that the UK has four of the top ten universities in the world. “The US - a country with 5x more people and 8x higher GDP - has the same number of universities in the global top 10.” But this diverges when it comes to start-ups coming out of universities. He says that “it’s striking how undergraduates at top US universities start companies at more than 5x the rate of their British-educated peers. Oxford is ranked 50th in the world, while Cambridge is 61st. Imperial just makes the list at #100.”

For Blomfield, this isn’t about talent, or education, or access to capital. He thinks it’s about culture:

People like to talk about the role of government incentives, but San Francisco politicians certainly haven’t done much to help the startup ecosystem over the last few years, while the UK government has passed a raft of supportive measures.

Instead, I think it’s something more deep-rooted - in the UK, the ideas of taking risk and of brazen, commercial ambition are seen as negatives. The American dream is the belief that anyone can be successful if they are smart enough and work hard enough. Whether or not it is the reality for most Americans, Silicon Valley thrives on this optimism.

The US has a positive-sum mindset that business growth will create more wealth and prosperity and that most people overall will benefit as a result. The approach to business in the UK and Europe feels zero-sum. Our instinct is to regulate and tax the technologies that are being pioneered in California, in the misguided belief that it will give us some kind of competitive advantage.

Young people who consider starting businesses are discouraged and the vast majority of our smart, technical graduates take “safe” jobs at prestigious employers.

For him, the cultural blocker is an instinctive pessimism about the potential for success. A big question for policymakers and business leaders in the UK (and across Europe) is how to address a barrier to growth that is rooted in culture.

HT to Onward’s Shivani Menon for this piece.

Complexity, science policy and the Red Queen Problem

Nicklas Lundblad and Dorothy Chou compellingly address a key challenge of ongoing scientific progress - named after Lewis Carroll’s Red Queen, “who notes that it takes all the running one can do to remain in the very same place.” For the authors, problems of increasing complexity have been solved by science and technology but, in turn, this progress has further increased complexity and increased the need for further scientific progress. They point to Joseph Tainter’s work on The Collapse Of Complex Societies as an illustration of what can happen to complex societies that don’t continually progress to match growing complexity.

They point to the difficulty of measuring scientific progress, often because of the difficulty of predicting and understanding long-term impact.

“Perhaps with AI, now is the time as fields must detangle understanding and knowledge from comprehension, to develop greater curiosity and value it more than mastery.”

And taking science policy seriously is a crucial part of dealing with the challenges of complexity:

Taking science and the rate of progress seriously will therefore continue to be essential, as will the need to ensure science policy is fit for purpose. Within the model, science is not a single policy area, but the one that underpins all others -- the continued expansion of organised human curiosity is the premise on which progress relies. If we're going to successfully meet the challenges we face as a society head on, science policy - now confined to discussions of funding, major initiative and the effects on the startup market - will have to become something much more grounded and central to our political discourse.

Forbes’s Next Billion Dollar Startups

For the past decade, Forbes have published a list of the 25 U.S. venture-backed companies most likely to reach a $1 billion valuation. And their record isn’t a bad one:

Of the list’s 225 alumni, 131, or 58%, became unicorns, including DoorDash, Figma, Anduril, Benchling and Rippling, although 21 of those are now worth less than $1 billion. Forty-two were acquired; only three (1%) went public for less than $1 billion. There have been surprisingly few disasters: Just five startups imploded or shut down, most spectacularly microbiome testing startup uBiome, an alum of the 2018 list, which liquidated after being raided by the FBI over its billing practices.

As such, the list is worthy of note each year. This year’s list was published last week and is as dominated by AI as you would expect, with some fascinating use cases around health and manufacturing (see also IP25).

Personalised Treatment for Parkinson’s Disease

On the subject of scientific progress, the New York Times reported on a Nature Medicine study that highlighted the ability to use AI to personalise treatment for Parkinson’s patients - what they describe as “a personalised brain pacemaker”.

In the study, which was published Monday in the journal Nature Medicine, researchers transformed deep brain stimulation — an established treatment for Parkinson’s — into a personalized therapy that tailored the amount of electrical stimulation to each patient’s individual symptoms…. The researchers found that for Mr. Connolly and the three other participants, the individualized approach, called adaptive deep brain stimulation, cut in half the time they experienced their most bothersome symptom… Mr. Connolly, now 48 and still skateboarding as much as his symptoms allow, said he noticed the difference “instantly.” He said the personalization gave him longer stretches of “feeling good and having that get-up-and-go.” The study also found that in most cases, patients’ perceived quality of life improved.

The hope is that this study will eventually lead to a step-change in how the disease is treated:

Most patients went from experiencing their worst symptoms for about 25 percent of the day to about 12 percent of the day, Dr. Starr said. Adaptive stimulation also decreased those symptoms’ severity. And, importantly, it did not worsen — and in some cases, it improved — the “opposite” symptoms. (The “opposite” of stiffness was uncontrolled movement, for example.)

AI is getting much, much better at mathematics… and table tennis

DeepMind argue that “Artificial general intelligence (AGI) with advanced mathematical reasoning has the potential to unlock new frontiers in science and technology”, but admit that “current AI systems still struggle with solving general math problems because of limitations in reasoning skills and training data.” Given this, the launch of AlphaProof and AlphaGeometry 2 looks like an impressive advance in the quest for AI to understand mathematical reasoning. “Together, these systems solved four out of six problems from this year’s International Mathematical Olympiad (IMO), achieving the same level as a silver medalist in the competition for the first time.”

The IMO is the oldest, largest and most prestigious competition for young mathematicians, held annually since 1959… Each year, elite pre-college mathematicians train, sometimes for thousands of hours, to solve six exceptionally difficult problems in algebra, combinatorics, geometry and number theory…More recently, the annual IMO competition has also become widely recognised as a grand challenge in machine learning and an aspirational benchmark for measuring an AI system’s advanced mathematical reasoning capabilities.

AlphaProof solved two algebra problems and one number theory problem by determining the answer and proving it was correct. This included the hardest problem in the competition, solved by only five contestants at this year’s IMO. AlphaGeometry 2 proved the geometry problem, while the two combinatorics problems remained unsolved.

DeepMind has clearly had a busy Olympic month. They have also been able to train a robot to beat a human at table tennis. As the MIT Tech Review reported:

Google DeepMind has trained a robot to play the game at the equivalent of amateur-level competitive performance… It claims it’s the first time a robot has been taught to play a sport with humans at a human level… Researchers managed to get a robotic arm wielding a 3D-printed paddle to win 13 of 29 games against human opponents of varying abilities in full games of competitive table tennis. The research was published in an Arxiv paper… The system is far from perfect. Although the table tennis bot was able to beat all beginner-level human opponents it faced and 55% of those playing at amateur level, it lost all the games against advanced players. Still, it’s an impressive advance.

And the research is not just all fun and games. In fact, it represents a step towards creating robots that can perform useful tasks skillfully and safely in real environments like homes and warehouses, which is a long-standing goal of the robotics community.

The problem with training data

In IP9, I asked whether a “scarcity of data could be a roadblock to AI progress” and considered the hope that “generative AI itself and notably synthetic data might help to solve the problem.” A study in Nature, however, suggests that there might be issues with this approach, as signalled by its rather alarming headline: “AI models collapse when trained on recursively generated data”.

The study found that:

Generative artificial intelligence (AI) such as large language models (LLMs) is here to stay and will substantially change the ecosystem of online text and images. Here we consider what may happen… once LLMs contribute much of the text found online. We find that indiscriminate use of model-generated content in training causes irreversible defects in the resulting models, in which tails of the original content distribution disappear. We refer to this effect as ‘model collapse’ and show that it can occur in LLMs as well as in variational autoencoders (VAEs) and Gaussian mixture models (GMMs). We build theoretical intuition behind the phenomenon and portray its ubiquity among all learned generative models. We demonstrate that it must be taken seriously if we are to sustain the benefits of training from large-scale data scraped from the web. Indeed, the value of data collected about genuine human interactions with systems will be increasingly valuable in the presence of LLM-generated content in data crawled from the Internet.

As Ben Evans noted about the paper’s findings though:

This speaks to the ‘model collapse’ problem, but needs to be read with caution, since the word ‘indiscriminately’ is important: this study is based on training that only used data output from another model, which is more a proof-of-concept than a realistic scenario. In other words, we can use ‘synthetic data’, but only in some domains, to some degree, with caution.
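For readers who want to see the effect in miniature, here is a toy sketch in Python. It is not the paper's method or code: it assumes the simplest possible "model" (a single Gaussian), a deliberately tiny training set, and the exact scenario Evans flags as unrealistic, where each generation is trained only on the previous generation's output. The function name and parameters are hypothetical, chosen purely for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Repeatedly fit a Gaussian to samples drawn from the previous fit.

    Toy illustration only: a one-parameter-family stand-in for a
    generative model, trained each round purely on synthetic data.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0: the "real" data distribution
    history = [std]
    for _ in range(generations):
        # Draw a small training set from the current model only.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # Refit the model to its own output (maximum-likelihood estimates).
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


history = simulate_collapse()
print(f"std at generation 0: {history[0]:.3f}")
print(f"std at generation {len(history) - 1}: {history[-1]:.3e}")
```

Because every refit slightly underestimates the spread, the fitted standard deviation drifts towards zero over the generations, which is the "tails disappear" behaviour in its simplest form. Mixing fresh real data back into each round, which this sketch deliberately does not do, is the kind of discriminate use that Evans's caveat points to.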

Is Europe regulating itself to economic sclerosis?

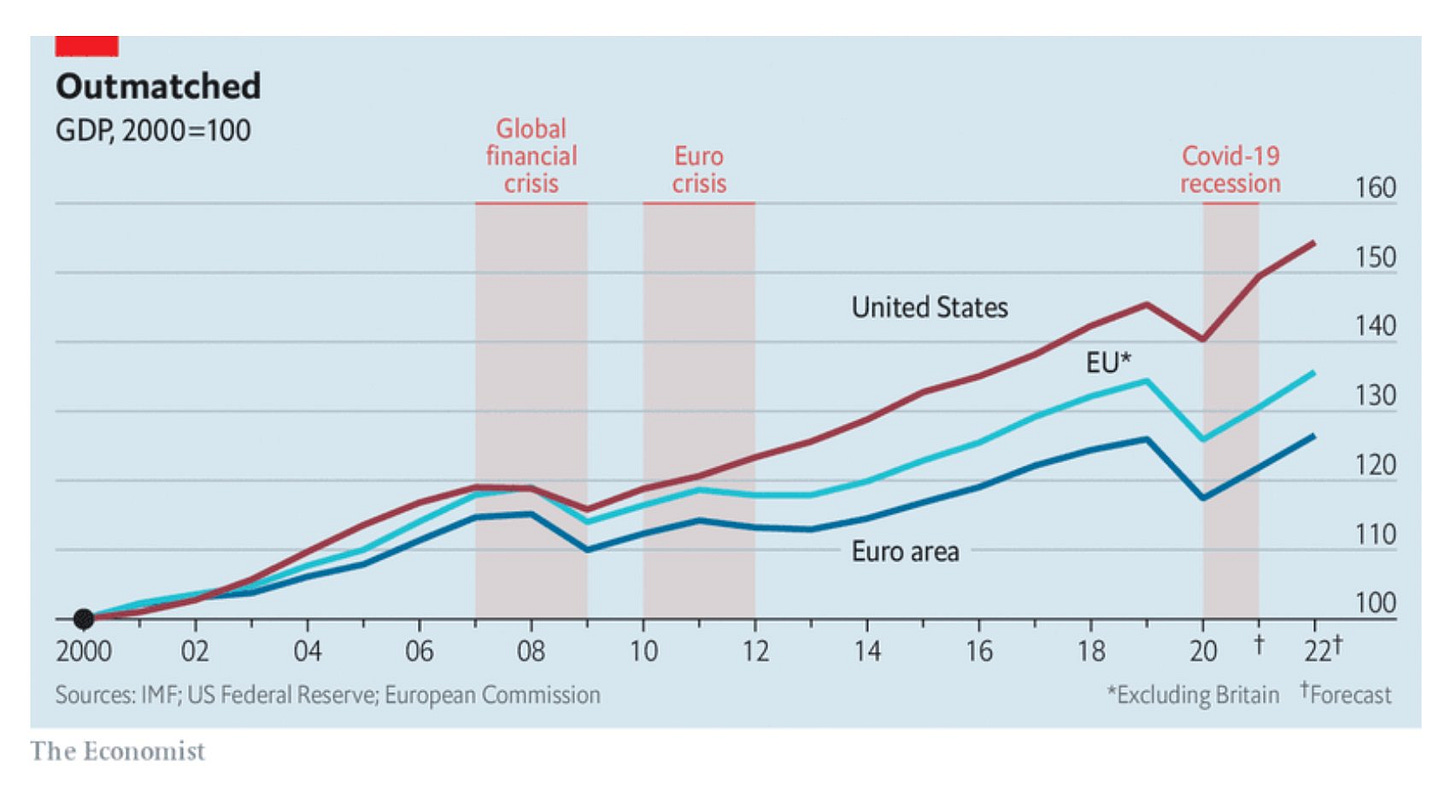

We’ve known for a while that the EU (and to a lesser, but still excessive, extent the UK) wants to be seen as a regulation leader in the world of tech. But it’s becoming clear that this desire to regulate is stymying growth and holding back innovation. According to Le Monde, the GDP gap between Europe and the United States is now 80%.

Ben Thompson published a must-read essay about the broader impacts of over-regulation of tech earlier this summer. It was something Thompson (who lives in Taiwan, but often works in the US) noted while on a trip to Europe:

During the recent Stratechery break I was in Europe, and, as usual, was pretty annoyed by the terrible Internet experience endemic to the continent: every website has a bunch of regulatory-required pop-ups asking for permission to simply operate as normal websites, which means collecting the data necessary to provide whatever experience you are trying to access. This obviously isn’t a new complaint — I feel the same annoyance every time I visit.

What was different this time is that, for the first time in a while, I was traveling as a tourist with my family, and thus visiting things like museums, making restaurant reservations, etc.; what stood out to me was just how much information all of these entities wanted: seemingly every entity required me to make an account, share my mailing address, often my passport information, etc., just to buy a ticket or secure a table. It felt bizarrely old-fashioned.

For Thompson, this meant that, “the Internet experience in America is better because the market was allowed to work”, with the European internet effectively being trapped in a time warp. This has led to a situation where existing tech companies are increasingly reluctant to launch new products in the EU because of regulatory restrictions and newer tech companies will be unlikely to prioritise the EU market. Meanwhile, Europe falls behind in producing innovative companies of its own, with a focus on leading in regulation, not innovation.

As he notes, a focus on regulation over innovation could lead to Europe falling further behind:

Here’s the problem with leading the world in regulation: you can only regulate what is built, and the E.U. doesn’t build anything pertinent to technology.

It’s easy enough to imagine this tale being told in a few years’ time:

…The powers that be… seemingly unaware that their power rested on the zero marginal cost nature of serving their citizens, made such extreme demands on U.S. tech companies that they artificially raised the cost of serving their region beyond the expected payoff. While existing services remained in the region due to loyalty to their existing customers, the region received fewer new features and new companies never bothered to enter, raising the question: if a regulation is passed but no entity exists that is covered by the regulation, does the regulation even exist?

This is a story that Bloomberg also investigated in a deep-dive on the EU’s AI Act, warning that there is “a risk these new rules only end up entrenching the transatlantic tech divide.” The lead author of the AI Act even told Bloomberg that “the regulatory bar maybe has been set too high.” According to the piece:

“The US dominates venture-capital fundraising, especially in AI, where two-thirds of funding has gone stateside since 2022. Top officials like Mario Draghi have sounded the alarm that the EU regulates while others innovate, and that the region’s economy needs the kind of productivity boost that AI could provide.”

Different visions of the geopolitics of AI

Pablo Chavez is always a must-read on the geopolitics of AI (check out his writing on Sovereign AI here) and a few weeks ago he published a great reflection on the subject. This followed both Sam Altman and Mark Zuckerberg publishing separate visions “about how the United States should deploy and govern AI power. Read together, these two pieces represent a high-stakes dialogue on the geopolitics of AI, portraying sometimes competing, sometimes complementary visions of American AI leadership.”

He summarises the different worldviews:

At a high level, Altman is calling for a disciplined US-led industrial policy effort, embracing cooperation with like-minded democracies, and emphasizing the importance of coordinated action. His focus on security, infrastructure investment, and international norms leads to a controlled but generous release of AI to allies and partners — as well as co-development with these partner nations — while making significant efforts to keep frontier AI out of the hands of China and other autocratic rivals.

Conversely, Zuckerberg champions an organic, hands-off approach to democratizing AI. His call for an open-source AI ecosystem echoes a more inclusive and collaborative ethos, potentially fostering a dynamic AI landscape that empowers a broader range of actors. Like Altman, he’s concerned about China, but he sees openness as a means to stay ahead. He argues this is the only option to ensure AI technology remains broadly distributed and describes an alternative where the technology becomes concentrated in the hands of a select few.

A new way of thinking about doomers and optimists?

Matthew Yglesias, in his Slow Boring Substack, made a counterintuitive argument that we’re thinking about AI optimists and doomers the wrong way round:

Outside of a handful of internet personalities, relatively few of the people raising safety concerns about AI development are actually saying humanity is doomed. A completely unregulated electricity generation market would be a huge mess, causing unacceptable levels of pollution and death, but we don’t label those who call for utilities regulation “electricity doomers.” Powerful technologies with massive upside often also have significant downside, with the power to doom us if, and only if, we plow ahead in a totally heedless way. The argument is that we shouldn’t do that.

And on the other side, most of the people in the non-doom camp aren’t really optimists deep down. There may be a handful of true accelerationists who sincerely yearn to bring about humanity’s suppression by silicon-based intelligences. But it seems to me that the vast majority of anti-doomers are actually much more skeptical of AI. Some are really hard skeptics who think we’re in an “AI bubble” that’s about to burst and that all of this may never amount to anything. But many of those who are more optimistic are still soft AI skeptics. They fundamentally agree with the bubble-callers and tech industry haters that this is just the latest thing in the Silicon Valley hype cycle. They’re just more optimistically disposed to the hype cycle.

This camp (I’ll call them normalizers) encompasses a range of more specific opinions about artificial intelligence. But they’re the same sort of opinions people tend to hold about everything else in the tech world. Some are deeply worried about algorithmic bias and racism. Others are excited about making a ton of money in innovative startups or concerned about antitrust and consolidations. Still others think that pessimistic anti-tech vibes hold us back as a society. Some think the media is too credulous about founders and VCs, while some think the media is absurdly biased against founders and VCs.

But these are the same arguments we have about crypto, about the metaverse, about mobile, about social media, and everything else. Because most optimists are not, fundamentally, AI optimists — they are superintelligence skeptics.

HT James Crabtree for this one.